Powered by MissionSquare

Artificial intelligence (AI) has moved from a distant concept to everyday practice in state and local government. From document automation to resident-facing chatbots, AI is reshaping how public agencies operate, the skills teams need, and how employees experience their work.

MissionSquare Research Institute’s recent survey of 2,000 public employees reveals a nuanced picture: while nearly half of employees already use AI tools at work, attitudes toward these technologies vary widely, influencing morale, productivity, and even retirement planning engagement. This article explores what drives successful adoption, addresses workforce concerns, and offers actionable recommendations for local government leaders.

This article is based on a MissionSquare Research Institute and Morning Consult survey conducted in January 2025 and the two resulting reports: “Artificial Intelligence in the Workforce: A Survey of State and Local Employees” and “Artificial Intelligence in the Public Workforce: An In-Depth Study on Employee Perspectives and Retirement Planning Implications.”

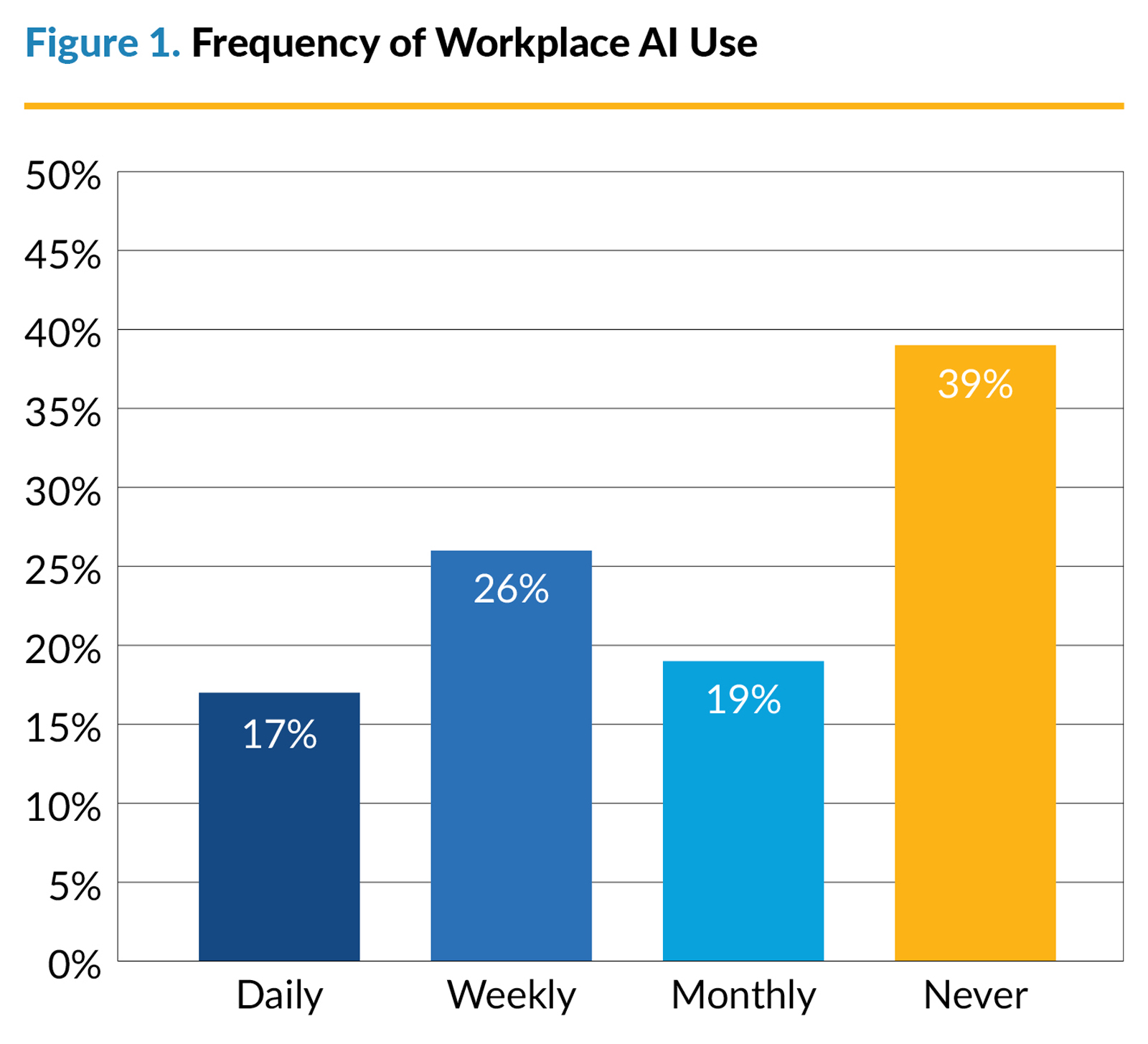

What emerges is a clear pattern: adoption success is not just about the technology’s capabilities; it is fundamentally about people—comfort, preparedness, communication, and confidence in the integrity of outputs. In the survey, nearly half of employees report using AI tools at work (46%), yet only 17% do so daily, suggesting AI is edging toward the mainstream but is not yet fully embedded in day-to-day workflows.

Importantly, the research captures outcomes beyond input metrics: more than half of respondents report improved work quality and productivity, and more than 60% perceive that communities are satisfied with AI-enabled services. Employees also report feeling comparatively knowledgeable about AI—even when formal training lags—highlighting a readiness that leaders can harness with structured programs.

Nevertheless, concerns persist: data security and privacy, output reliability (including the risk of hallucinations), and job displacement fears are salient for many, reinforcing the need for transparent policies, oversight, and human-in-the-loop practices. This study offers a rare, employee-centered lens on what drives adoption, what impedes it, and how leaders can implement AI in ways that lift service quality without eroding trust.

Current State of AI Adoption

AI is being deployed across a range of functions, from automating routine administrative tasks to enhancing resident engagement through chatbots. Departments reporting moderate or advanced adoption often cite improved turnaround times and reduced manual errors as major benefits.

Based on the survey, AI applications in government increasingly fall into two clusters:

1. Internal efficiency tools (e.g., drafting, editing, summarization, routing, and document processing).

2. Resident-facing capabilities (e.g., chatbots for FAQs, appointment scheduling, and translation).

On the resident-facing side, agencies are implementing AI-powered chatbots to handle FAQs and appointment scheduling, and using language translation tools to make public information accessible to non-English-speaking residents. These applications illustrate how AI can streamline operations while improving inclusivity and service delivery.

Crucially, adoption remains uneven. Smaller jurisdictions and those with constrained IT capacity may be slower to implement or may rely on commercially available tools rather than customized solutions. This research suggests implementation pacing matters: moderate adoption correlates with the strongest positive service-quality impacts, while aggressive, advanced rollouts can introduce complexity that dampens outcomes without adequate training and support. This finding argues for measured expansion—pilot, learn, standardize—rather than a wholesale push.

Workforce Impacts and Concerns

Employees report tangible productivity gains—more than half see improved work quality and faster throughput—while communities register satisfaction with AI-enabled services (over 60% ‘very’ or ‘somewhat’ satisfied where assessed). These are encouraging signals that AI can augment public service without sacrificing the human touch.

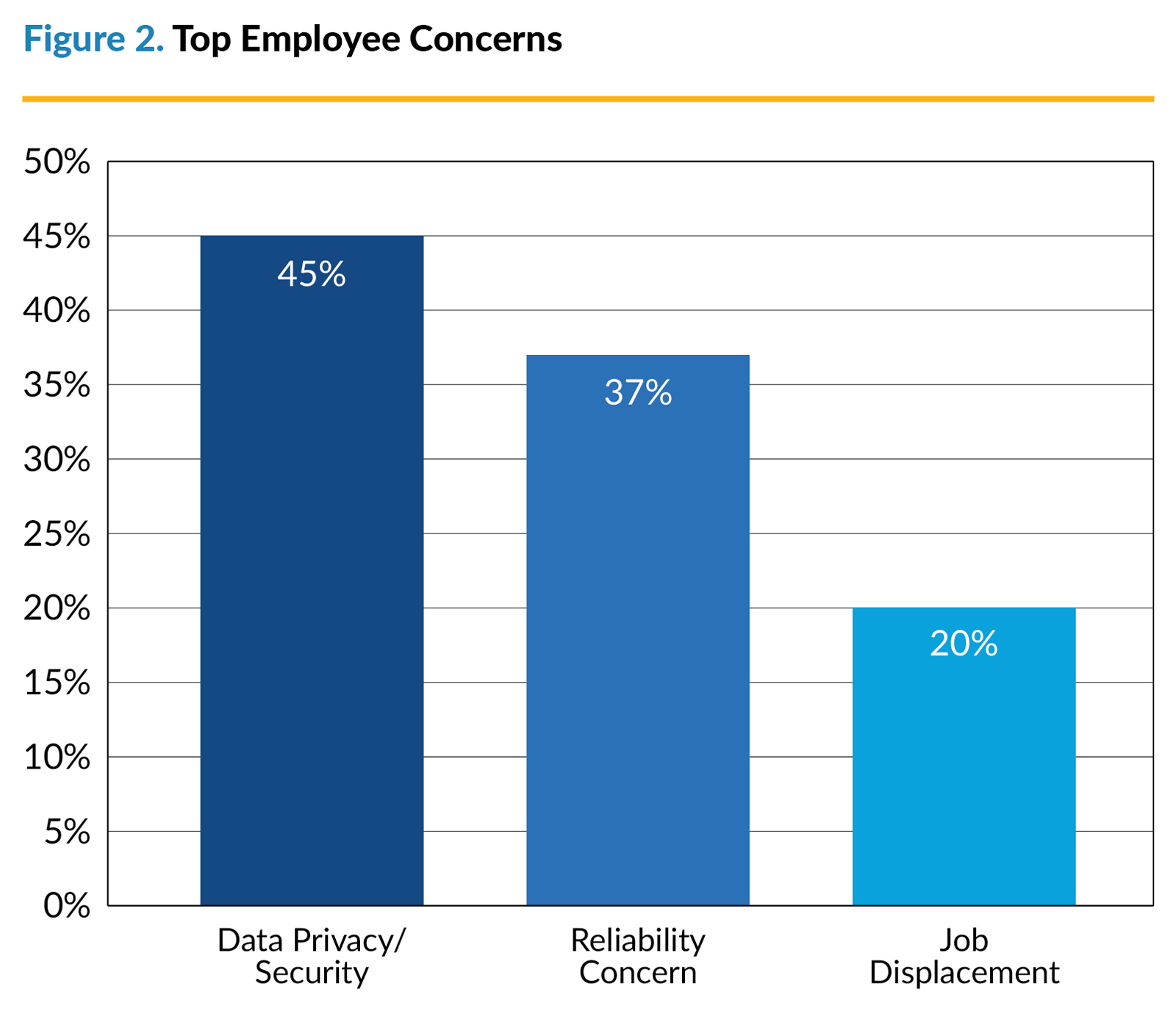

At the same time, employees are candid about risks. Data privacy and security lead concerns (45%), underscoring the responsibility to protect personally identifiable information (PII) and sensitive operational data. Reliability is next (37%), reflecting awareness of hallucinations, AI tools built on irrelevant or out-of-date materials, and misclassification in predictive models. Job displacement anxiety is not dominant but is significant: one in five employees is very or extremely concerned about AI replacing their function, even as 63% express minimal concern.

Concerns map closely to implementation experience. The survey analysis shows that morale tracks with preparedness: employees who feel ‘very prepared’ for AI exhibit three times higher odds of positive morale, while those who feel unprepared or highly anxious about job replacement report markedly lower satisfaction.

For leaders, this translates to two mandates:

- Build preparedness through training, change management, and clear role definitions.

- Address job security fears head-on, explaining how AI reallocates time toward higher-value tasks rather than replacing human judgment.

Transparency—about where AI will be used, how outputs will be reviewed, and how staff will be supported—preempts rumor and builds buy-in.
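The "three times higher odds" figure cited above is easy to misread as "three times as likely." As a rough illustration only, the sketch below treats the finding as an odds ratio of 3 (an interpretation, not something the survey text spells out) and applies it to a purely hypothetical baseline share of employees with positive morale. Neither the baseline value nor the function name comes from the research.

```python
def probability_from_odds_ratio(baseline_probability: float, odds_ratio: float) -> float:
    """Convert a baseline probability and an odds ratio into the implied probability."""
    # Odds are p / (1 - p); an odds ratio scales the odds, not the probability.
    baseline_odds = baseline_probability / (1 - baseline_probability)
    scaled_odds = baseline_odds * odds_ratio
    # Convert the scaled odds back into a probability.
    return scaled_odds / (1 + scaled_odds)


# Hypothetical baseline: assume half of less-prepared employees report positive morale.
baseline = 0.50
very_prepared = probability_from_odds_ratio(baseline, 3.0)
print(f"{very_prepared:.2f}")  # 0.75
```

Under that assumed baseline, tripling the odds moves the share from 50% to 75%, not to 150%. The practical size of the effect depends on the starting point, which is why the reported odds should be read alongside the underlying survey percentages.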

What Drives Successful Adoption?

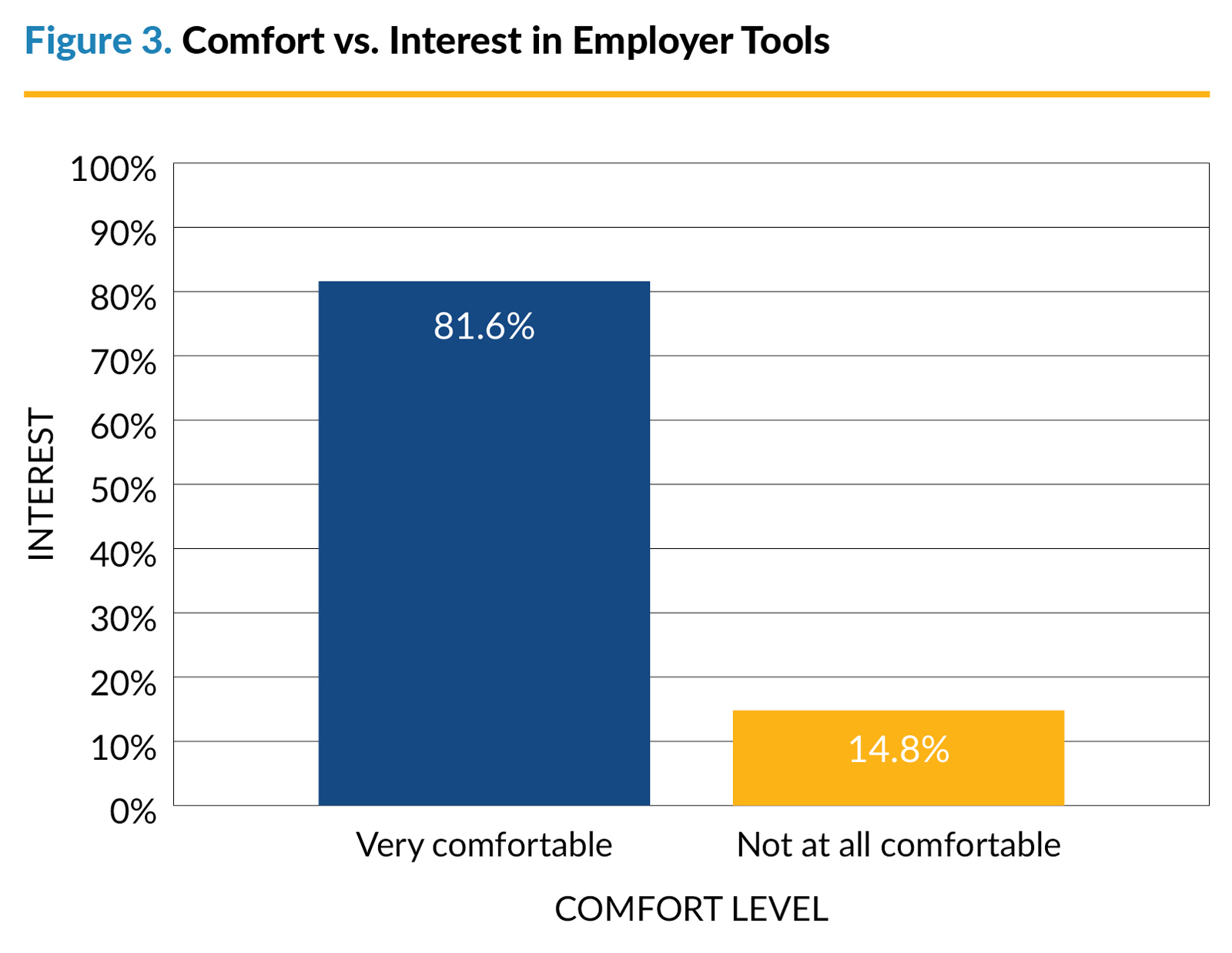

Preparedness and comfort are critical because adoption is fundamentally behavioral. Among employees who are very comfortable using AI tools, 81.6% expressed interest in applying AI for financial decision-making, compared to only 14.8% among those not at all comfortable. Preparedness amplifies this effect: employees who are “very prepared” have dramatically higher odds of positive morale and greater openness to new tools. This creates a virtuous cycle: training and clear governance boost comfort; comfort increases adoption; adoption, when supported, improves service quality and morale.

Practical tactics include establishing departmental AI champions, co-developing use policies with staff, and implementing human-in-the-loop review for any outward-facing content or decisions. Leaders should prioritize low-risk, high-yield use cases first, such as drafting correspondence, summarizing meeting notes, and routing documents, before piloting more complex analytics or predictive models. Sequencing matters: MissionSquare Research Institute’s data indicate that moderate implementation tends to yield the best net outcomes, whereas rapid, advanced rollouts can outpace user readiness and support infrastructure.
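For teams that want to make the human-in-the-loop idea concrete, the following is a minimal sketch of a review gate, not a description of any agency's actual system. The `Draft` class, its fields, and the `can_publish` and `approve` helpers are all hypothetical names introduced for illustration. The rule it encodes is the one described above: internal drafts can move freely, while anything outward-facing needs a named human reviewer first.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Draft:
    """An AI-assisted draft (hypothetical structure for illustration)."""
    text: str
    outward_facing: bool  # True if residents or the public will see it
    approved_by: Optional[str] = None  # name of the human reviewer, if any


def approve(draft: Draft, reviewer: str) -> Draft:
    """Record that a named staff member reviewed and approved the draft."""
    draft.approved_by = reviewer
    return draft


def can_publish(draft: Draft) -> bool:
    """Internal drafts pass; outward-facing drafts require a human sign-off."""
    if not draft.outward_facing:
        return True
    return draft.approved_by is not None


notice = Draft(text="Road closure notice", outward_facing=True)
print(can_publish(notice))  # False: no reviewer yet
approve(notice, reviewer="Communications lead")
print(can_publish(notice))  # True: a human has signed off
```

Recording who approved each item, rather than a simple yes/no flag, also gives leaders an audit trail they can point to when explaining how outputs are reviewed.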

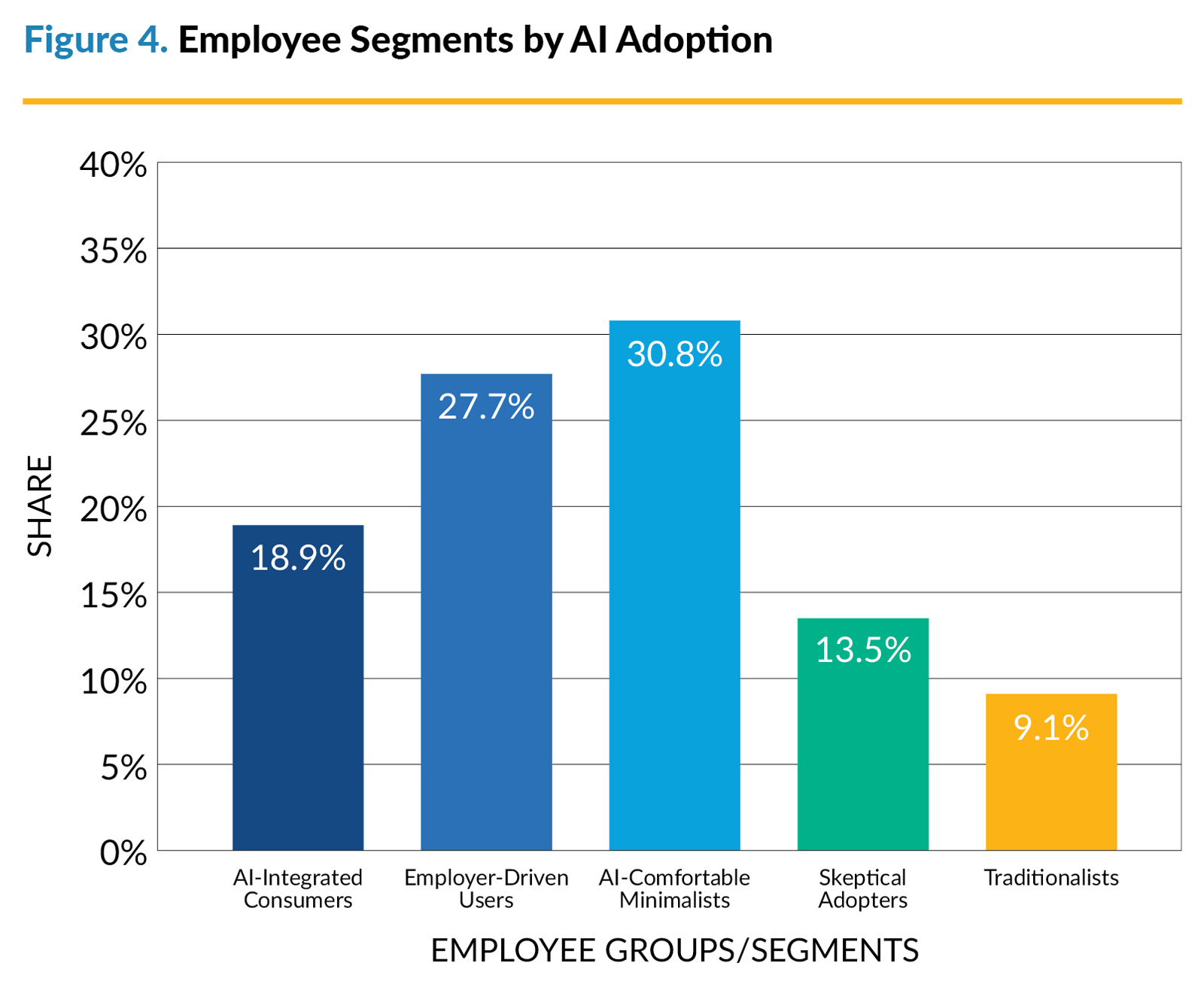

Finally, position AI as a complement, not a replacement. Based on a latent class segmentation analysis from our in-depth study of employee perspectives, early-adopter segments (AI-integrated consumers and employer-driven AI users) show strong usage of both AI tools and human financial professionals, suggesting that technology increases engagement rather than supplanting expertise. Communicating that AI extends capabilities—lowering barriers to information, accelerating routine tasks, and personalizing guidance—helps diffuse zero-sum fears and invites participation.

Beyond the Workplace: AI and Retirement Planning

One consequential insight from this research is the tight coupling between workplace AI familiarity and personal retirement planning engagement. Employees who use AI at work are more than twice as likely to use AI for retirement planning (56.5% vs. 26.2%), and those very comfortable with AI are vastly more interested in employer-sponsored tools (81.6% vs. 14.8%). This pattern reveals an adoption pathway public employers can actively cultivate: build competence and confidence in everyday workplace use, and participation in AI-enabled financial wellness follows.
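As a quick check on the "more than twice as likely" comparison, the arithmetic can be worked directly from the two percentages reported above. The variable names are illustrative; only the 56.5% and 26.2% figures come from the survey.

```python
# Share using AI for retirement planning, as reported in the survey.
workplace_ai_users = 56.5   # percent, among employees who use AI at work
non_users = 26.2            # percent, among employees who do not

relative_likelihood = workplace_ai_users / non_users
percentage_point_gap = workplace_ai_users - non_users

print(f"{relative_likelihood:.2f}x as likely, {percentage_point_gap:.1f} points apart")
# 2.16x as likely, 30.3 points apart
```

Both views are useful: the ratio supports the "more than twice" wording, while the gap of roughly 30 percentage points conveys how many additional employees that difference represents.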

Why does workplace experience drive personal finance engagement? Familiarity reduces perceived risk. Employees who use AI for drafting, translation, or scheduling see its practical reliability, and its limitations, firsthand. That grounded understanding lowers psychological barriers to trying a retirement income estimator or goal-tracking tool. The institute’s segmentation analysis from the in-depth study makes this concrete.

Five distinct groups emerge, each requiring a tailored strategy: AI-integrated consumers (18.9%), employer-driven AI users (27.7%), AI-comfortable minimalists (30.8%), skeptical adopters (13.5%), and traditionalists (9.1%). These segments differ not only in AI usage, but also in financial behaviors and preferences.

AI-integrated consumers show the highest engagement, with 93.6% using AI for retirement planning and 72% working with financial professionals—proof that technology complements human guidance.

Employer-driven AI users favor employer-sponsored tools (83.2%) and thrive with institutional support and secure access.

AI-comfortable minimalists prefer simple, self-service tools like income estimators and progress trackers.

Skeptical adopters need reassurance and gradual exposure, making education and peer testimonials effective.

Traditionalists show minimal interest—12.1% in employer tools and only 2.4% using AI for retirement planning—underscoring the need to maintain non-AI options for inclusivity.

For plan sponsors and employers, the strategy should reflect these distinct needs. Start with tools that have broad appeal (income estimation, goal tracking) and layer more sophisticated capabilities (tax-efficient withdrawal strategies, investment rebalancing suggestions) as confidence grows. Pair feature rollout with communication that emphasizes security, oversight, and complementarity with human advisors. AI cannot and should not replace fiduciary guidance; it should equip participants with personalized insights and nudge them toward informed decisions.

Finally, this study reminds us that technology adoption follows diffusion patterns, not demographic stereotypes. Comfort and confidence—not age or education alone—drive engagement. That is good news: leaders can influence comfort through training, transparent communication, and hands-on experience. By linking workplace use to retirement planning tools, employers create a reinforcing loop of familiarity and value: staff learn AI in low-risk tasks; they apply it to personal financial goals with institutional support; they build confidence that carries back into their work. The result is a workforce more capable of harnessing AI’s benefits across domains.

Conclusion

AI is no longer a distant concept for state and local governments—it’s here, reshaping workflows, service delivery, and even employee expectations. Its success depends on how leaders bring employees along. This research illuminates a possible path: build preparedness, nurture comfort, and implement with care. In practice, that means starting with low-risk applications, communicating transparently, and pairing AI with human expertise.

The link between workplace AI and retirement planning underscores a broader opportunity: when employees experience trustworthy, useful AI on the job, they are more likely to embrace tools that support their financial well-being. In a labor market where recruitment is challenging and retirement eligibility is rising, employers who invest in AI-enabled engagement can improve retention, strengthen benefits utilization, and demonstrate a culture of innovation that attracts talent.

Ultimately, AI is a tool. Its value depends on governance, training, and the people who wield it. The data suggest that public-sector employees are ready to learn and ready to lead—provided they have clear guardrails, reliable support, and a meaningful voice in how AI is applied. That is the promise leaders can fulfill: technology that augments human judgment and expands public service capacity, delivered with integrity and empathy.

ZHIKUN LIU, PhD, CFP® is vice president and head of MissionSquare Research Institute.

References

MissionSquare Research Institute, Artificial Intelligence in the Workforce: A Survey of State and Local Employees (June 2025).

MissionSquare Research Institute, Artificial Intelligence in the Public Workforce: An In-Depth Study on Employee Perspectives and Retirement Planning Implications (September 2025).

MissionSquare Research Institute, News Release: 1 in 5 Public Sector Workers Fear AI Overtaking Their Jobs (June 16, 2025).

MissionSquare Research Institute, 2025 State and Local Workforce Report (July 2025).
